One of the key challenges in fraud detection today is the black-box nature of AI-powered detection services. Designed to help teams quickly detect complex risk patterns as they emerge, these tools often return recommendations or risk scores without any information on how they arrived at these verdicts. This makes it difficult for users to tune and update the underlying models, collaborate across teams, justify decisions about fraud claims or prove regulatory compliance.

Fortunately, SHAP values, a technique from the emerging field of model explainability in machine learning, are aimed at tackling these problems. This post explains what SHAP values are, how they work and how Transmit Security’s Detection and Response Services leverage SHAP values to provide transparency into their AI-powered decisioning logic.

The need for model explainability

Implementing AI without proper human oversight can lead to all kinds of biases and inaccuracies. As a result, it’s imperative that models used to make crucial decisions, such as whether to approve a loan, deny a fraud claim or provide access to critical services, offer sufficient transparency into their decisioning mechanisms.

Model explainability is a field of machine learning (ML) focused on enabling users to understand how much each individual factor contributed to a model’s decisions. Its use cases include:

- Complying with legal and regulatory requirements

- Improving model performance and customizing for unique business needs

- Facilitating collaboration and communication among stakeholders

- Enhancing accountability and transparency of AI systems to avoid bias

- Enabling auditors or regulators to review and audit AI models

Although model explainability encompasses a range of techniques, one stands out as especially promising in the field of risk, trust, fraud, bots and behavior detection: SHapley Additive exPlanations, or SHAP values.

What are SHAP values and how do they work?

SHAP values are a game-theoretic approach to model explainability that provide transparency into:

- The importance of different features within an ML model

- Whether each feature has a negative or positive impact

- How different features contribute to individual predictions

This last point differentiates SHAP values from many other model explainability techniques, which provide only global explainability: aggregated results showing how features contribute to predictions across an entire dataset. SHAP values also give researchers and analysts local explainability for individual predictions, which makes them well suited to fraud investigations and helps teams quickly tune models when false positives and negatives are detected.

Calculating SHAP values

SHAP is a framework that provides computationally efficient ways to calculate, or approximate, Shapley values. In game theory, Shapley values quantify how much each player contributed to a team’s win or loss. A player’s Shapley value is the weighted average of their marginal contribution (the difference between the points a group of players scores with that player and without them) across all possible subsets of the other players.
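For reference, this is the standard definition. In a game with a set of players N and a payoff function v that assigns a value v(S) to every coalition S of players, the Shapley value of player i is:

```latex
\phi_i(v) = \sum_{S \subseteq N \setminus \{i\}}
  \frac{|S|!\,\bigl(|N| - |S| - 1\bigr)!}{|N|!}
  \Bigl( v\bigl(S \cup \{i\}\bigr) - v(S) \Bigr)
```

For each player, the weights sum to one across all coalitions, and the players’ Shapley values add up to v(N) − v(∅), so the full payoff is shared out with nothing left over.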

The same logic can be applied to ML models to determine the importance of individual features, the measurable characteristics or attributes of the data that the model uses to learn patterns and relationships. Here, the features act as the players and the model’s output (for example, a risk score) is the payoff: a feature’s SHAP value measures how much that feature moved a given prediction away from the model’s baseline, or average, output. Features whose SHAP values are consistently large in magnitude can be considered key factors in the model’s decision-making process.
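To make this concrete, here is a minimal brute-force sketch that computes exact Shapley values for a single prediction by enumerating every subset of features. It assumes that features outside a coalition are replaced by their value in a single baseline input. This is a simplification: SHAP libraries average over background data and use much faster algorithms, because the number of subsets grows exponentially with the number of features. The `risk_model` function and its three binary features are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction, by enumerating every feature subset.

    Features outside a coalition take their value from `baseline` (a simplifying
    assumption; SHAP libraries average over background data instead).
    """
    n = len(x)

    def value(coalition):
        # Model output when only the features in `coalition` use the real input values.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return predict(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1, drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Weighted marginal contribution of feature i to coalition s.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def risk_model(z):
    # Hypothetical toy risk model over [new_device, risky_ip, typing_matches_profile].
    new_device, risky_ip, typing_match = z
    return (0.2 + 0.3 * new_device + 0.2 * risky_ip
            + 0.2 * new_device * risky_ip - 0.1 * typing_match)


phi = shapley_values(risk_model, x=[1, 1, 1], baseline=[0, 0, 0])
print([round(v, 3) for v in phi])  # [0.4, 0.3, -0.1]
```

The three values sum to 0.6, the gap between the explained score (0.8) and the baseline score (0.2). The interaction term between `new_device` and `risky_ip` is split evenly between those two features, and the negative value for `typing_matches_profile` marks it as a trust signal.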

How can SHAP values be used in fraud detection?

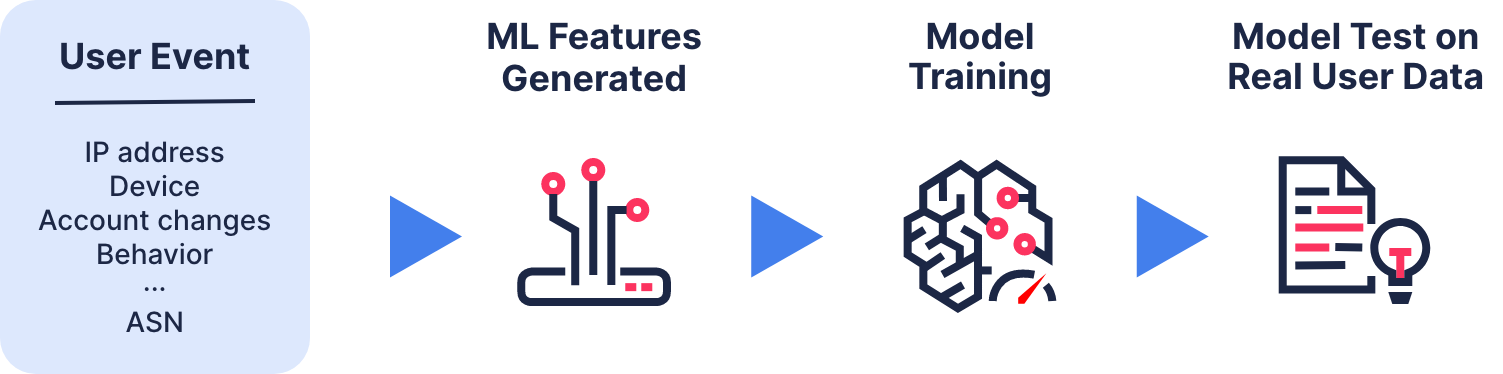

By leveraging threat intelligence to identify emerging attack patterns and techniques, data scientists can select appropriate training data and features to train a fraud detection model, then fine-tune it through feature engineering to obtain the best possible results, as explained in this blog post on machine learning.

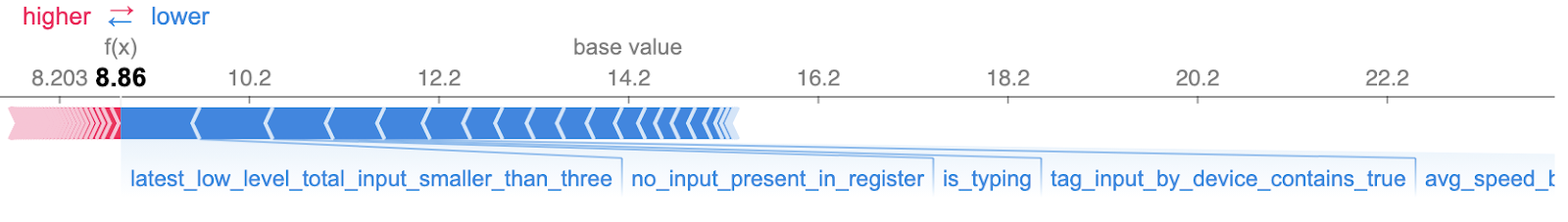

After choosing the best model, a SHAP library can be used to calculate the SHAP values for each feature in the model. Negative values push the risk score down and act as indicators of trust, while positive values push it up and act as risk signals. Aggregated across the dataset, these values can be visualized in a variety of ways that illustrate both the global importance and the directionality of each feature.
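One common way to turn per-prediction SHAP values into a global view is to average their absolute values per feature, which is the measure behind SHAP’s bar-style importance plots. The sketch below does that and, as a simple assumption of our own, uses the mean signed value to label each feature as a risk or trust signal. The feature names and SHAP values are made up for illustration.

```python
def global_summary(shap_matrix, feature_names):
    """Summarize SHAP values across many predictions (rows) and features (columns).

    Importance is the mean absolute SHAP value; direction is the mean signed SHAP
    value (positive = risk signal, negative = trust signal).
    """
    n_rows = len(shap_matrix)
    summary = []
    for j, name in enumerate(feature_names):
        column = [row[j] for row in shap_matrix]
        importance = sum(abs(v) for v in column) / n_rows
        direction = sum(column) / n_rows
        summary.append((name, importance, direction))
    # Most important features first.
    summary.sort(key=lambda item: item[1], reverse=True)
    return summary


# Illustrative, made-up SHAP values for three predictions.
shap_matrix = [
    [0.40, 0.30, -0.10],
    [0.20, 0.10, -0.30],
    [0.30, -0.05, -0.20],
]
names = ["new_device", "risky_ip", "typing_matches_profile"]

for name, importance, direction in global_summary(shap_matrix, names):
    signal = "risk" if direction > 0 else "trust"
    print(f"{name}: importance={importance:.3f} ({signal} signal)")
# new_device: importance=0.300 (risk signal)
# typing_matches_profile: importance=0.200 (trust signal)
# risky_ip: importance=0.150 (risk signal)
```

Ranking by absolute value keeps strong trust signals, such as `typing_matches_profile` here, near the top of the list instead of burying them beneath weaker risk signals.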

In addition, results can be retrieved to visualize how each feature contributed to individual predictions.

How do Transmit Security’s Detection and Response Services leverage SHAP values?

Model training and ongoing research

Transmit Security’s Detection and Response Services’ ML models are continually trained to detect new attack MOs based on:

- applied intelligence from the dark web

- stolen credentials from recent breaches

- engagement with threat actors

- reverse engineering attack tools and malware

- Red Team attack simulations

Our researchers leverage this threat intelligence to select the data and features used to train our ML models on emerging threat patterns, techniques and evasion tactics. Each model can then be evaluated using synthetic data that our researchers create by reverse-engineering attack tools, and tested on real user data, enabling them to optimize its detection capabilities and obtain the best possible results.

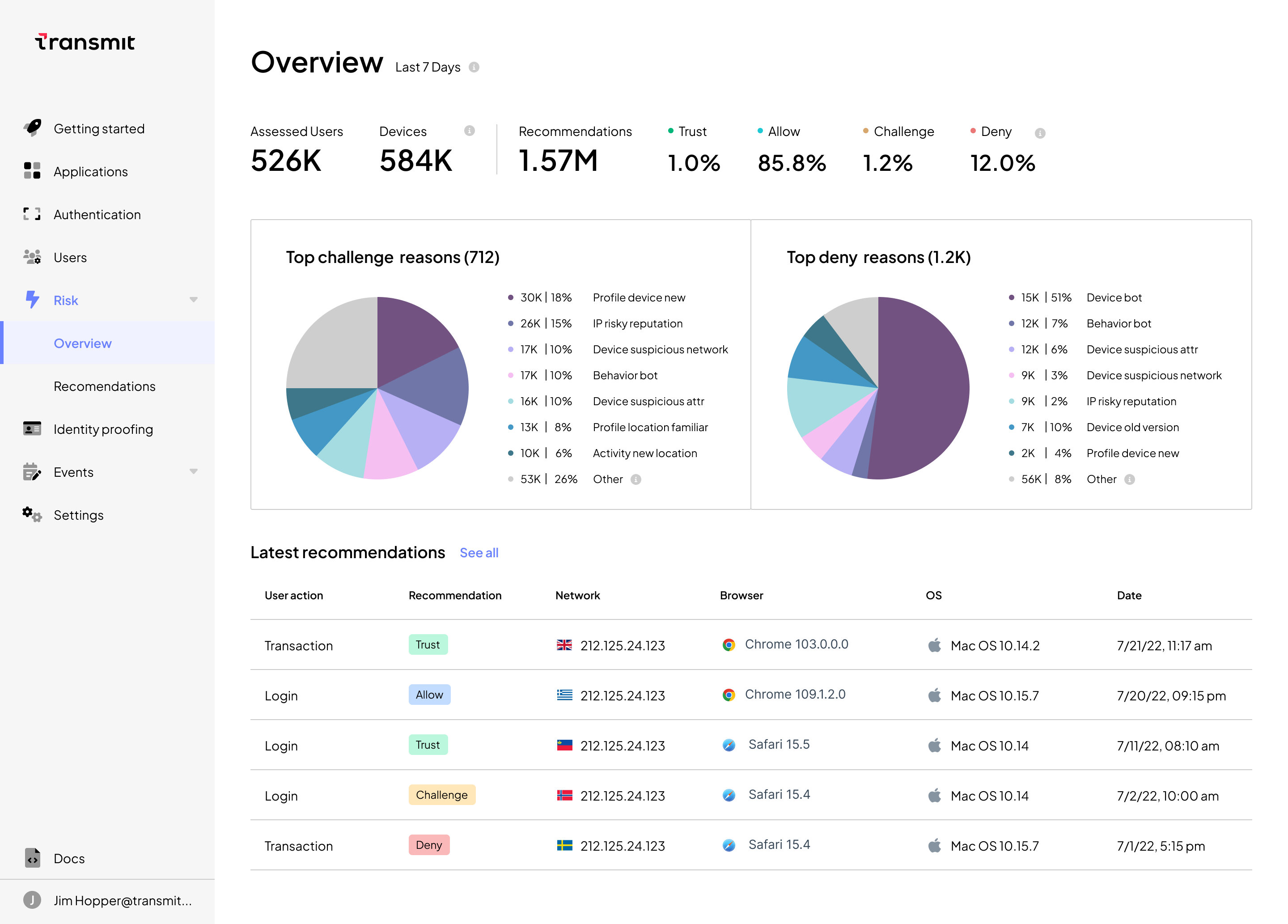

New models are deployed frequently, so the service can automatically apply the latest findings to detect emerging threats in real time and orchestrate responses, using its recommendations of Deny, Challenge, Allow and Trust as action triggers.

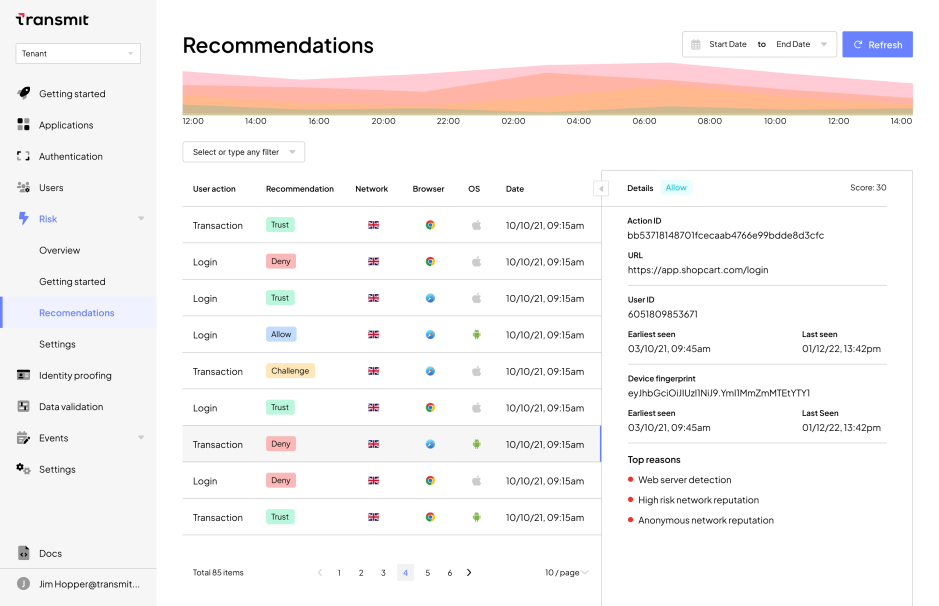

For each relevant user action, a recommendation is returned via the UI and API, along with the top reasons for that recommendation, as calculated using SHAP values.
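As a rough illustration of how "top reasons" can be derived from SHAP values, the hypothetical sketch below ranks one prediction’s features by the magnitude of their SHAP values and labels each as increasing or decreasing risk. It is not the service’s actual logic; the function name, feature names and values are assumptions made for the example.

```python
def top_reasons(shap_values, k=3):
    """Rank one prediction's features by the size of their SHAP value.

    shap_values maps feature name -> SHAP value for a single prediction.
    Returns up to k (name, effect, value) tuples, skipping features with no effect.
    """
    ranked = sorted(shap_values.items(), key=lambda item: abs(item[1]), reverse=True)
    reasons = []
    for name, value in ranked:
        if len(reasons) >= k:
            break
        if value == 0:
            continue  # this feature did not move the prediction
        effect = "increases risk" if value > 0 else "decreases risk"
        reasons.append((name, effect, value))
    return reasons


# Hypothetical SHAP values for a single login attempt.
example = {"new_device": 0.4, "risky_ip": 0.3, "known_ip": 0.0, "typing_matches_profile": -0.1}
for name, effect, value in top_reasons(example):
    print(f"{name}: {effect} ({value:+.2f})")
# new_device: increases risk (+0.40)
# risky_ip: increases risk (+0.30)
# typing_matches_profile: decreases risk (-0.10)
```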

Fraud analysts can then use this information in investigations, or to quickly identify false positives and negatives and tune the model using our Labels API. As profiles of individual user behavior are built up over time, businesses gain a higher level of assurance in each user’s risk and trust signals, based on usage patterns such as trusted device fingerprints, known IPs, behavioral biometrics and more.

In addition, SHAP calculations provide global indicators of risk and trust, such as bot behavior, which are exposed through rich visualizations in the UI dashboard. These enable analysts to quickly view overall trends that may indicate large-scale fraud campaigns, share information across teams and determine the best course of action to quickly adapt to these attack patterns.

Conclusion

Ultimately, businesses have a responsibility to their customers not only to discern trusted users from malicious ones, but also to explain and defend their decisions. No matter how accurate a model is, “the algorithm made me do it” is unlikely to satisfy customers who want to know why their account was suspended or their loan application was denied. By leveraging SHAP values in our Detection and Response Services, Transmit Security provides this transparency to users, simplifying the work of fraud analysts, security teams and other stakeholders.

To find out more about Detection and Response, check out this deep dive on our ML-based anomaly detection in action or read the full case study to find out how a leading US bank leveraged our services to gain 1300% return on investment from projected fraud losses and operational cost savings.