As malicious bots become increasingly sophisticated, the differences between human-like bots and trusted users become harder and harder to detect. In this environment, even small differences between human and bot behavior can have a big impact on bot mitigation. One way to discern these differences is through input method analysis. In this blog, we’ll explain what input method analysis is, why it matters, and how it can be used to establish trust in legitimate users and help identify bad bots.

Understanding input methods

Did you know that a web application can discern whether a user typed or pasted their username? Or recognize if they used a password manager? The means by which usernames, passwords, and other data are filled into form fields are known as input methods. Common input methods include:

- Typing in values directly

- Copying and pasting values from a list

- Injecting text into input fields with a script

- Autofilling fields with usernames and passwords saved in a browser

- Using a password manager to automatically fill in usernames and passwords

Although any of these methods could be used by either a trusted user or an attacker with malicious intent, certain usage patterns, such as multiple pastes in a short period of time, may indicate suspicious behavior. Input method analysis helps pinpoint suspicious behavior by closely examining data entry patterns, both for individual users and across groups of users, such as humans vs. human-like bots.
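As one illustration of a suspicious usage pattern, "multiple pastes in a short period of time" can be checked with a sliding time window. The sketch below is a hypothetical example, not Transmit Security's implementation: the `PasteObservation` type, the `hasPasteBurst` function, and the window length and paste limit are all assumptions chosen for illustration.

```typescript
// Hypothetical sketch: flag a session when too many paste events land inside a
// short sliding time window. The window length and paste limit are
// illustrative assumptions, not tuned values.

interface PasteObservation {
  field: string;       // form field that received the paste
  timestampMs: number; // when the paste event fired
}

function hasPasteBurst(
  pastes: PasteObservation[],
  windowMs: number,
  maxPastesInWindow: number,
): boolean {
  // Sort timestamps so the window can slide forward in time order.
  const times = pastes.map((p) => p.timestampMs).sort((a, b) => a - b);
  let start = 0;
  for (let end = 0; end < times.length; end++) {
    // Drop pastes that fall outside the window ending at times[end].
    while (times[end] - times[start] > windowMs) {
      start++;
    }
    if (end - start + 1 > maxPastesInWindow) {
      return true;
    }
  }
  return false;
}

// Three pastes within one second, with a limit of two per second.
const session: PasteObservation[] = [
  { field: "username", timestampMs: 0 },
  { field: "password", timestampMs: 400 },
  { field: "username", timestampMs: 900 },
];
console.log(hasPasteBurst(session, 1000, 2)); // true
```

On its own, a burst like this is only a weak signal; it becomes more meaningful when combined with the other signals discussed in this post.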

Deepening the data

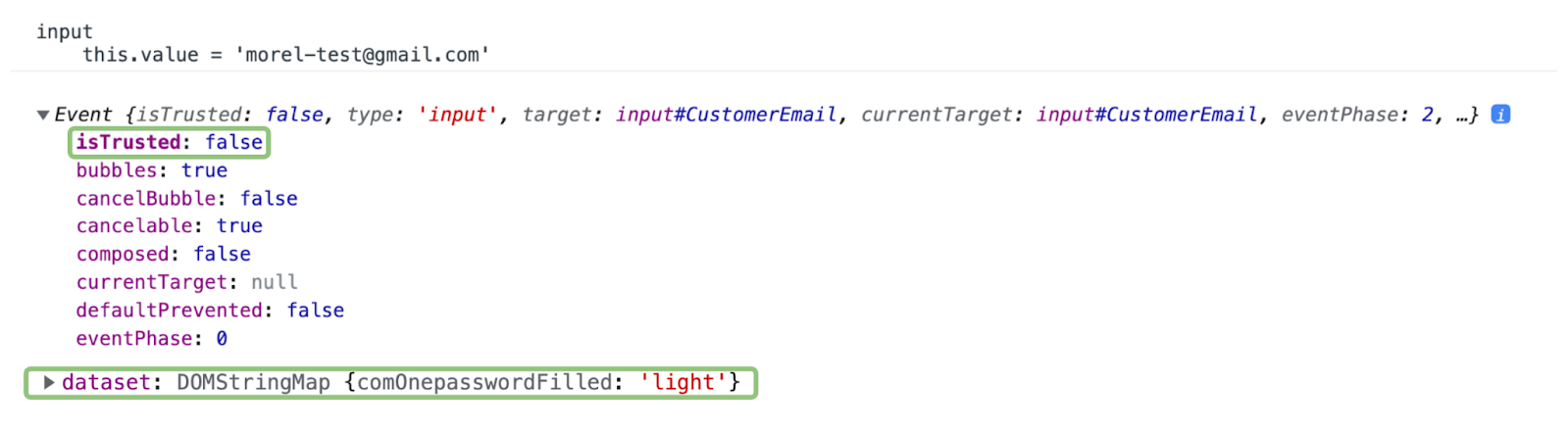

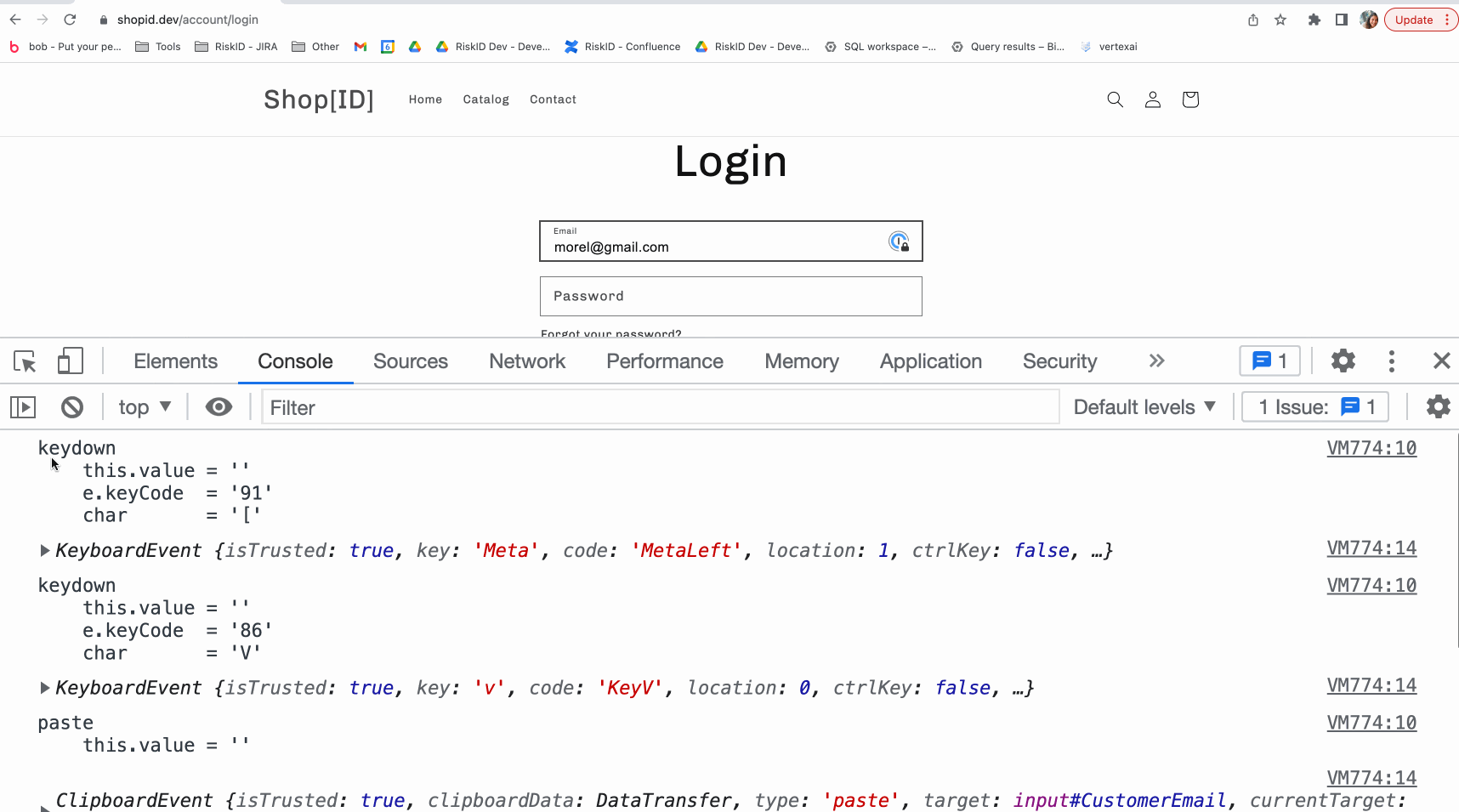

Input methods are identified by analyzing events in the HTML Document Object Model, or DOM. The DOM is a data representation of the objects — such as text, HTML elements and attributes — that comprise the structure and content of a webpage. Actions that occur on that webpage, including user interactions like typing or pasting information into a form field, are registered as DOM events. Below, you can see an example of what a DOM event created by the browser looks like.

When a user enters their username or password into a login form, an input event is dispatched on the form field, and the event's properties describe the interaction. For example, the event shown below represents an interaction where the user pasted their credentials into the field. Several event properties, circled in green and listed below, provide the information needed to deduce the input method:

- The inputType property is insertFromPaste, which indicates that the text was inserted by pasting.

- The isTrusted property is true, which indicates that a user interaction — as opposed to a script — triggered the event.

- The dataset property of the target element, which exposes the element's custom data-* attributes as key-value metadata, is empty.
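The properties listed above can be turned into a simple rule set. The following is a minimal sketch, assuming a tag has already copied the relevant fields into a plain object; `ObservedInputEvent`, `InputMethod`, and `classifyInputMethod` are hypothetical names, while the `insertText` and `insertFromPaste` values are standard inputType values.

```typescript
// Minimal sketch: deduce an input method from a snapshot of the event
// properties discussed above. ObservedInputEvent is a hypothetical plain-object
// type; in a browser, isTrusted and inputType would be read from the input event.

type InputMethod = "typed" | "pasted" | "script" | "unknown";

interface ObservedInputEvent {
  isTrusted: boolean;
  inputType?: string; // missing when a plain Event rather than an InputEvent fired
}

function classifyInputMethod(e: ObservedInputEvent): InputMethod {
  // Events dispatched from script (e.g. via dispatchEvent) are not trusted.
  if (!e.isTrusted) {
    return "script";
  }
  switch (e.inputType) {
    case "insertText": // typically a typed character
      return "typed";
    case "insertFromPaste": // clipboard paste
      return "pasted";
    default: // deletions, replacements, drag-and-drop, and so on
      return "unknown";
  }
}

console.log(classifyInputMethod({ isTrusted: true, inputType: "insertFromPaste" })); // pasted
console.log(classifyInputMethod({ isTrusted: false })); // script
```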

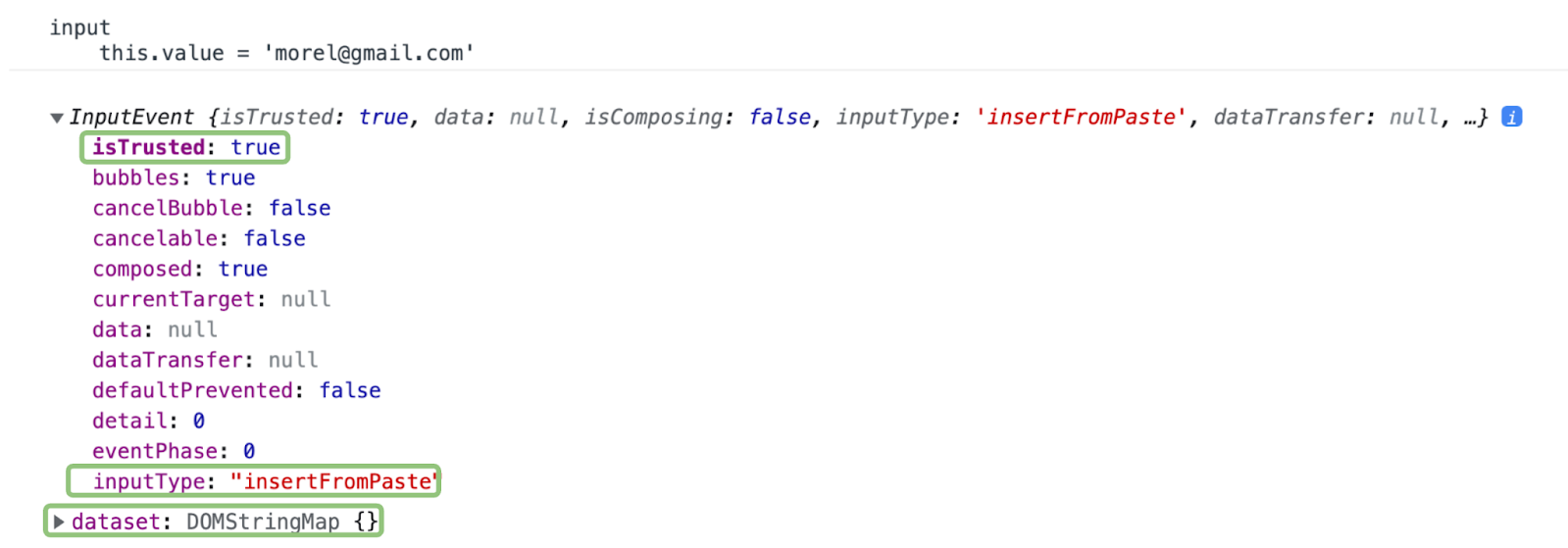

Now let’s see what happens when we use a password manager to fill in the same field:

- The isTrusted property is false because a script performed the interaction.

- The event doesn’t have an inputType property, because the script dispatched a plain event rather than an InputEvent describing a user edit.

- The dataset property contains the key-value pair comOnepasswordFilled: “light”, which identifies the password manager that filled the field (in this case, 1Password).
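The password manager case can be recognized by combining the three observations above. This is a hypothetical sketch: only the `comOnepasswordFilled` key comes from the example, and the `PASSWORD_MANAGER_MARKERS` table, `FieldSnapshot` type, and both functions are assumptions. Markers for other password managers would need to be collected from observed traffic.

```typescript
// Hypothetical sketch: recognize a password manager fill from the pattern above.
// Only the comOnepasswordFilled key comes from the example; a real marker table
// would have to be built from observed traffic and kept up to date.

const PASSWORD_MANAGER_MARKERS = new Map<string, string>([
  ["comOnepasswordFilled", "1Password"],
]);

interface FieldSnapshot {
  isTrusted: boolean;
  inputType?: string;
  dataset: Record<string, string>; // the target element's data-* attributes
}

// Return the name of the first known password manager marker, if any.
function detectPasswordManager(snapshot: FieldSnapshot): string | null {
  for (const key of Object.keys(snapshot.dataset)) {
    const manager = PASSWORD_MANAGER_MARKERS.get(key);
    if (manager !== undefined) {
      return manager;
    }
  }
  return null;
}

// Script-dispatched event, no inputType, and a known marker on the element.
function looksLikePasswordManagerFill(snapshot: FieldSnapshot): boolean {
  return (
    !snapshot.isTrusted &&
    snapshot.inputType === undefined &&
    detectPasswordManager(snapshot) !== null
  );
}

const filled: FieldSnapshot = {
  isTrusted: false,
  dataset: { comOnepasswordFilled: "light" },
};
console.log(detectPasswordManager(filled)); // 1Password
console.log(looksLikePasswordManagerFill(filled)); // true
```

A `Map` is used instead of a plain object lookup so that dataset keys such as `constructor` cannot accidentally match inherited object properties.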

Detection methods

Event data from the browser can be collected by adding tags (small snippets of code) to specific elements on a webpage, such as login forms, that record each interaction along with the input method used. Analyzing this data can help establish trust in users who consistently use the same input method, or detect suspicious interactions that may indicate bot behavior.
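A tag of this kind can be sketched as a listener that records one observation per input event. The example below is a hypothetical sketch: `FieldLike` and `EventLike` are stand-in types so that the code runs outside a browser (a real input element and its events would play these roles), and `attachInputTag` and `InputObservation` are illustrative names, not part of any product API.

```typescript
// Hypothetical sketch of a tag: a listener attached to a form field that
// records one observation per input event. FieldLike and EventLike are
// stand-ins for a real input element and its events, so the sketch runs
// outside a browser.

interface EventLike {
  isTrusted: boolean;
  inputType?: string;
  timeStamp: number; // milliseconds, as on DOM events
}

interface FieldLike {
  dataset: Record<string, string | undefined>;
  addEventListener(type: "input", listener: (e: EventLike) => void): void;
}

interface InputObservation {
  field: string;
  isTrusted: boolean;
  inputType: string | null;
  datasetKeys: string[];
  timestampMs: number;
}

function attachInputTag(
  field: FieldLike,
  fieldName: string,
  record: (obs: InputObservation) => void,
): void {
  field.addEventListener("input", (e) => {
    record({
      field: fieldName,
      isTrusted: e.isTrusted,
      inputType: e.inputType ?? null, // absent on plain script-dispatched events
      datasetKeys: Object.keys(field.dataset), // e.g. password manager markers
      timestampMs: e.timeStamp,
    });
  });
}

// Simulate a field and fire one typed character.
const listeners: Array<(e: EventLike) => void> = [];
const usernameField: FieldLike = {
  dataset: {},
  addEventListener: (_type, listener) => {
    listeners.push(listener);
  },
};
const observations: InputObservation[] = [];
attachInputTag(usernameField, "username", (obs) => observations.push(obs));
listeners[0]({ isTrusted: true, inputType: "insertText", timeStamp: 12 });
console.log(observations[0].inputType); // insertText
```

The recorded observations, including their timestamps, are the raw material for the profiling and typing-speed analyses in the following sections.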

User behavior anomalies

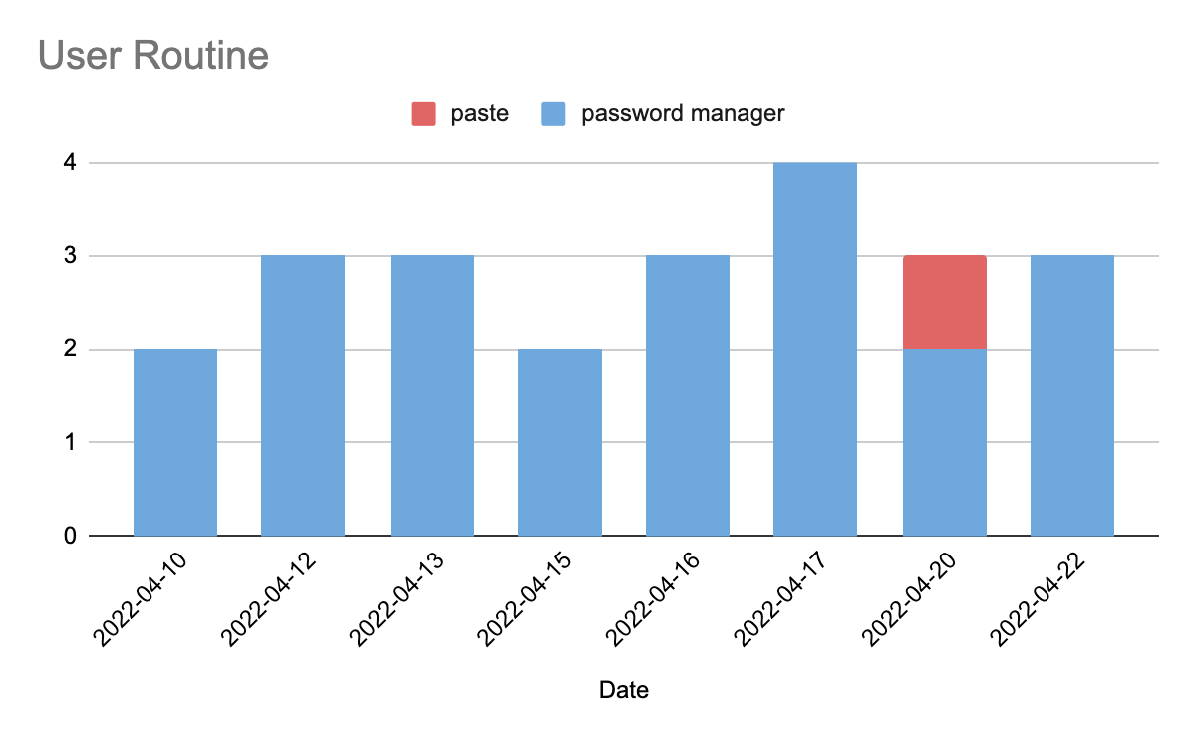

Continuously tagging a user’s input method interactions helps build a profile of their behavioral patterns. By comparing each new action with the user’s historical behavior, we can detect anomalies and use them to evaluate risk. The accompanying graph visualizes this type of anomaly: a user who consistently autofills their username and password with a password manager suddenly pastes their credentials manually instead. This sudden change could serve as evidence of suspicious behavior, especially in conjunction with other risk signals.
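One simple way to compare a new action with a user's history is to measure how often the user has used the same input method before. The sketch below is an assumption-laden illustration, not the production model: the `Method` labels, the `checkInputMethodAnomaly` function, the minimum history size, and the rarity threshold are all hypothetical.

```typescript
// Hypothetical sketch: judge whether a new input method is unusual for one
// user by comparing it with that user's history. The minimum history size and
// rarity threshold are illustrative assumptions.

type Method = "typed" | "pasted" | "autofill" | "passwordManager" | "script";

interface AnomalyResult {
  anomalous: boolean;
  historicalShare: number; // fraction of past interactions that used this method
}

function checkInputMethodAnomaly(
  history: Method[],
  current: Method,
  minHistory = 5,
  rareShare = 0.1,
): AnomalyResult {
  if (history.length === 0) {
    return { anomalous: false, historicalShare: 0 };
  }
  const matches = history.filter((m) => m === current).length;
  const share = matches / history.length;
  // Only flag once there is enough history to describe the user's habits.
  const anomalous = history.length >= minHistory && share < rareShare;
  return { anomalous, historicalShare: share };
}

// A user who always fills credentials with a password manager suddenly pastes them.
const habit: Method[] = new Array<Method>(10).fill("passwordManager");
console.log(checkInputMethodAnomaly(habit, "pasted").anomalous); // true
console.log(checkInputMethodAnomaly(habit, "passwordManager").anomalous); // false
```

The result is best treated as one risk signal among several rather than a verdict, in line with the point above about combining it with other risk signals.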

Typing speed

When values are typed directly into a field, typing speed can help indicate whether a human or a bot entered them. Humans get distracted while typing, and some keys are harder to reach than others, so the time between their keystrokes varies. Bots, by contrast, are expected to type much faster than humans, with far less fluctuation in typing speed.

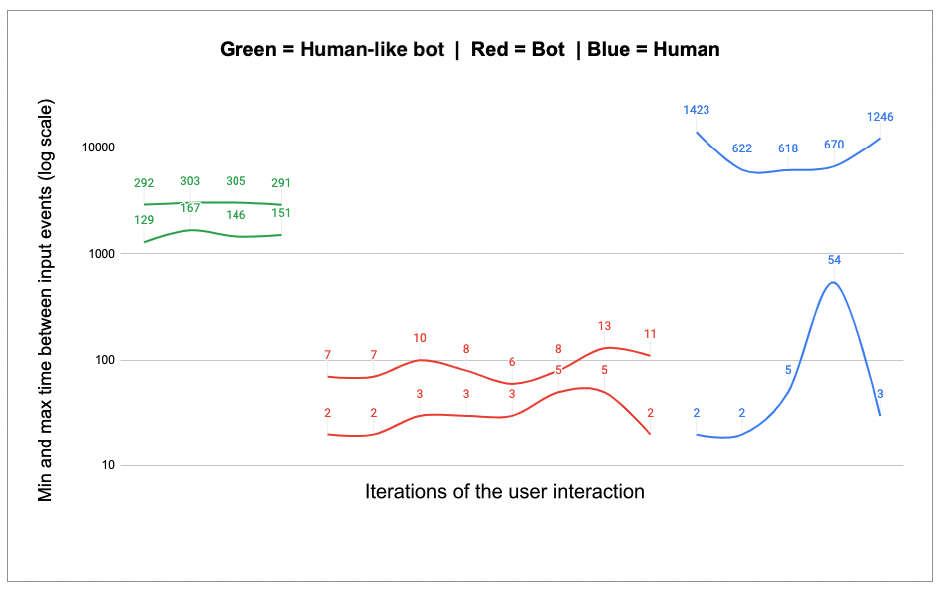

However, malicious bots are increasingly designed to mimic human activity, making them harder to detect. To better distinguish between human and bot typing speed, our Security Research Lab analyzed user interactions in three separate use cases: typical bot behavior, human-like bot behavior, and human behavior. Multiple DOM events were reported for each interaction, and each event was timestamped. For example, each letter typed when filling a username into a login form might be recorded as a separate, timestamped event, showing how much time passed between letters. We then calculated typing speed as the average time between typing events, averaged across repeated interactions. Fluctuation was determined by comparing the minimum and maximum time between consecutive typing events. The chart shows the range of typing speed for the three use cases, with the two lines representing the maximum and minimum time, in milliseconds, between events.
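The measurement described above can be sketched from a list of event timestamps. This is a minimal sketch under stated assumptions: `typingStats` and `averageMeanInterval` are hypothetical names, and treating the spread between the longest and shortest interval (max minus min) as the fluctuation is a simplification of "comparing the minimum and maximum time between consecutive typing events".

```typescript
// Minimal sketch: given the timestamps of consecutive typing events, compute
// the average interval and the spread between the shortest and longest
// interval. Using max - min as "fluctuation" is an assumed simplification.

interface TypingStats {
  meanIntervalMs: number;
  minIntervalMs: number;
  maxIntervalMs: number;
  fluctuationMs: number;
}

function typingStats(timestampsMs: number[]): TypingStats | null {
  if (timestampsMs.length < 2) return null; // need at least one interval
  const intervals: number[] = [];
  for (let i = 1; i < timestampsMs.length; i++) {
    intervals.push(timestampsMs[i] - timestampsMs[i - 1]);
  }
  const sum = intervals.reduce((a, b) => a + b, 0);
  const min = Math.min(...intervals);
  const max = Math.max(...intervals);
  return {
    meanIntervalMs: sum / intervals.length,
    minIntervalMs: min,
    maxIntervalMs: max,
    fluctuationMs: max - min,
  };
}

// Average the per-interaction mean interval over repeated interactions.
function averageMeanInterval(interactions: number[][]): number | null {
  const means = interactions
    .map((ts) => typingStats(ts))
    .filter((s): s is TypingStats => s !== null)
    .map((s) => s.meanIntervalMs);
  if (means.length === 0) return null;
  return means.reduce((a, b) => a + b, 0) / means.length;
}

// Intervals 100, 250, 100 -> mean 150, min 100, max 250, fluctuation 150.
const stats = typingStats([0, 100, 350, 450]);
console.log(stats?.meanIntervalMs, stats?.fluctuationMs); // 150 150
```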

In the chart, the green lines represent a bot that acts like a human. Its average time between keystrokes (~300 ms) resembles human typing, but its low fluctuation allows us to classify it as bot activity. The red lines illustrate typical bot behavior, where typing is very fast (~8 ms between keystrokes) and fluctuation is very low. Finally, the blue lines depict human behavior, which has high fluctuation and is more dynamic.
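The three patterns in the chart suggest a simple two-step rule: very short intervals indicate a typical bot, and human-like intervals with a steady rhythm indicate a human-like bot. The sketch below is hypothetical; the `classifyTyping` function and both thresholds are illustrative assumptions loosely based on the approximate values above, not values from the study, and a production system would learn such boundaries from data.

```typescript
// Hypothetical classifier echoing the three patterns in the chart. The
// thresholds are illustrative assumptions, not values from the study.

type TypingClass = "bot" | "humanLikeBot" | "human";

function classifyTyping(
  meanIntervalMs: number,
  fluctuationMs: number,
  fastThresholdMs = 50,  // assumed: far below human typing, well above ~8 ms
  lowFluctuationMs = 40, // assumed: spread too steady for a human typist
): TypingClass {
  // Typical bot: keystrokes arrive far faster than a person could type.
  if (meanIntervalMs < fastThresholdMs) return "bot";
  // Human-like bot: plausible speed, but a suspiciously steady rhythm.
  if (fluctuationMs < lowFluctuationMs) return "humanLikeBot";
  // Human: plausible speed with the irregular rhythm of real typing.
  return "human";
}

console.log(classifyTyping(8, 2));     // bot
console.log(classifyTyping(300, 10));  // humanLikeBot
console.log(classifyTyping(250, 400)); // human
```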

Advanced bot detection with Transmit Security

Analyzing input methods improves bot detection by finding anomalies in user behavior and by providing more information on the differences between human behavioral patterns and those of evasive, human-like bots. Transmit Security’s Detection and Response service enables advanced bot detection by combining input method analysis with other detection methods, including device fingerprinting, IP reputation, user profiling, link analysis, and velocity checks. Using machine learning to correlate and analyze a broad range of telemetry from these and other detection methods, Detection and Response provides transparent, contextual recommendations that can serve as action triggers for responding to risk and trust signals in real time, for each user, at each moment in the user journey. For more information on Detection and Response, check out the product page on our website.